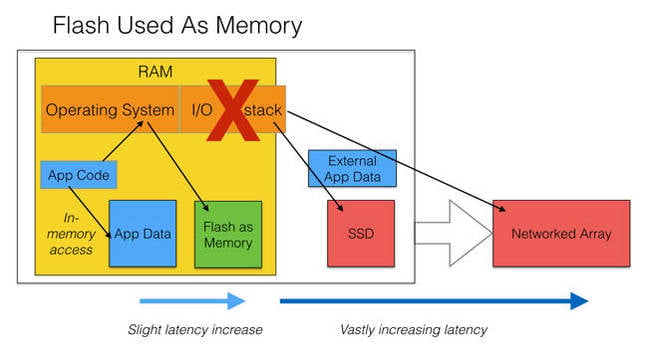

FaME tries to solve this by accessing SSDs in a way that mimics access to RAM, skipping the SCSI stack and driving down latency. This begins to close the DRAM-SAN gap, achieving latencies in the 500-900ns range. There is a whole host of ways to accomplish this.
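As a rough illustration of why the fabric matters at this scale, here is a small Python sketch that works out how much of the 500-900ns target would be eaten by switch traversal alone, using the InfiniBand (~100ns) and RoCE Ethernet (~230ns) port-to-port figures quoted in the list below. It rests on a hypothetical simplification: each remote access is one request plus one response, each crossing exactly one switch. NIC, PCIe, serialization and cabling delays are ignored, so real overheads would be higher.

```python
from dataclasses import dataclass

# Figures quoted in the post, in nanoseconds.
FAME_TARGET_NS = (500, 900)  # FaME access-latency range
PORT_TO_PORT_NS = {
    "InfiniBand": 100,
    "RoCE (Ethernet)": 230,
}

# Assumption (hypothetical): one request + one response, each crossing
# exactly one switch port-to-port hop. Everything else is ignored.
SWITCH_HOPS_PER_ACCESS = 2


@dataclass(frozen=True)
class Budget:
    fabric: str
    target_ns: int
    network_ns: int

    @property
    def remaining_ns(self) -> int:
        """Time left for everything other than switch traversal."""
        return self.target_ns - self.network_ns

    @property
    def network_share(self) -> float:
        """Fraction of the target consumed by switch hops alone."""
        return self.network_ns / self.target_ns


def budgets() -> list[Budget]:
    """One Budget per (fabric, target) pair."""
    out = []
    for fabric, hop_ns in PORT_TO_PORT_NS.items():
        for target in FAME_TARGET_NS:
            out.append(Budget(fabric, target, SWITCH_HOPS_PER_ACCESS * hop_ns))
    return out


if __name__ == "__main__":
    for b in budgets():
        print(f"{b.fabric:16} target {b.target_ns} ns: "
              f"switching {b.network_ns} ns ({b.network_share:.0%}), "
              f"{b.remaining_ns} ns left")
```

Under these assumptions, two InfiniBand hops use 200ns (40% of a 500ns target), while two RoCE hops use 460ns (92%), leaving very little for the flash access itself.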

- NVMe is one: a protocol for attaching SSDs directly over PCIe, for example as a card in a PCIe slot. Pop one in a server and it behaves far more like local memory than SAN storage does. The disadvantage is that you've re-introduced the problems the SAN/NAS was introduced to solve: stranded capacity, no built-in data protection features (snapshots, replication), and the need to find a way to make the NVMe device available to multiple nodes for parallelization and redundancy. Think Fusion-io, which also required significant re-coding of applications.

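- RDMA (Remote Direct Memory Access), which lets one server read and write another server's memory without going through the remote CPU or OS. It runs natively over InfiniBand and over Ethernet via RoCE (RDMA over Converged Ethernet).

  - InfiniBand has port-to-port latencies of ~100ns.

  - RoCE over Ethernet has port-to-port latencies of ~230ns. RoCE v1 is a link-layer protocol, so it can't be routed across IP subnets; RoCE v2 runs over UDP/IP and is routable, but many operating systems don't support it yet.

- iWARP is another way to do RDMA: it carries RDMA traffic over a stateful transport such as TCP, so it works over ordinary routed IP networks.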

- Memcached is an open-source, distributed in-memory key-value cache that lets your servers use other servers' DRAM as an extension of their own. It simply holds part of your current working set (objects stored and fetched by key over the network); it doesn't offload any computation. Here's a good explanation.

- HDFS, key-value store semantics, and other protocols: this may be the smartest approach of all. Just let the application talk directly to a storage array the same way it would talk to RAM.
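For a feel of what "using another server's DRAM" through key-value semantics looks like on the wire, here is a minimal Python sketch of memcached's text protocol: a `set` command stores a value under a key, `get` fetches it, and the client decides which server owns a key by hashing it. The server addresses are hypothetical (11211 is memcached's default port), and the plain modulo hashing in `pick_server` is a simplification; production clients commonly use consistent hashing so that adding a server doesn't remap most keys. No network I/O happens here; the functions only build and parse protocol bytes.

```python
import zlib

MAX_KEY_LEN = 250  # memcached's key length limit


def _check_key(key: str) -> None:
    # Keys must be non-empty, at most 250 bytes, and free of whitespace and
    # control characters; this sketch also restricts them to printable ASCII.
    if not key or len(key) > MAX_KEY_LEN or not all(33 <= ord(c) <= 126 for c in key):
        raise ValueError(f"invalid memcached key: {key!r}")


def pick_server(key: str, servers: list[str]) -> str:
    """Choose the server that owns `key` (simple modulo hashing, illustration only)."""
    _check_key(key)
    return servers[zlib.crc32(key.encode("ascii")) % len(servers)]


def encode_set(key: str, value: bytes, flags: int = 0, exptime: int = 0) -> bytes:
    """Build `set <key> <flags> <exptime> <bytes>\\r\\n<data>\\r\\n`."""
    _check_key(key)
    header = f"set {key} {flags} {exptime} {len(value)}\r\n".encode("ascii")
    return header + value + b"\r\n"


def encode_get(keys: list[str]) -> bytes:
    """Build `get <key> [<key> ...]\\r\\n` for one or more keys."""
    for k in keys:
        _check_key(k)
    return ("get " + " ".join(keys) + "\r\n").encode("ascii")


def parse_get_response(buf: bytes) -> dict[str, bytes]:
    """Parse zero or more `VALUE` blocks followed by `END`."""
    items: dict[str, bytes] = {}
    pos = 0
    while True:
        eol = buf.index(b"\r\n", pos)  # raises ValueError if truncated
        line = buf[pos:eol].decode("ascii")
        pos = eol + 2
        if line == "END":
            return items
        parts = line.split()
        # VALUE <key> <flags> <bytes> [<cas unique>]
        if len(parts) not in (4, 5) or parts[0] != "VALUE":
            raise ValueError(f"unexpected line: {line!r}")
        key, nbytes = parts[1], int(parts[3])
        # Read by byte count, so values may themselves contain "\r\n".
        items[key] = buf[pos:pos + nbytes]
        pos += nbytes
        if buf[pos:pos + 2] != b"\r\n":
            raise ValueError("data block not terminated by CRLF")
        pos += 2


if __name__ == "__main__":
    servers = ["10.0.0.1:11211", "10.0.0.2:11211"]  # hypothetical cache nodes
    print(pick_server("user:42", servers))
    print(encode_set("user:42", b"hello"))
    print(parse_get_response(b"VALUE user:42 0 5\r\nhello\r\nEND\r\n"))
```

Reading each value by its declared byte count, rather than scanning for the next line break, is what lets binary values pass through unchanged.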

Courtesy of http://www.theregister.co.uk/2015/05/01/flash_seeks_fame/

Looks like FaME will be a good price/performance solution until we develop a super-cheap static RAM.
